Using JavaScript to generate a SQL Script

It was a bleak Tuesday morning and the slow of Slack channels were quiet, as if they had been abandoned. Suddenly, the universally recognizable whoosh pop-pi-pop sound blares from your MacBook speakers and your heart skips a beat. Your gaze catches the bright red 1 beside a private chat with your manager. Your hand shaking, you hover over the chat, and for a brief moment even consider changing your status to 🍔 Lunch, but the gravity of responsibility pushes your finger down and opens the message.

We need to update our system's schools data – Manager

Alright, I'll stop being so dramatic..

This was a coming change my team and I were aware of for weeks before receiving this message. Our team is working on building a complex student data management system (SDMS) and part of the system relies on a list of schools that help drive some of the control flow in the data.

Why are we updating our schools list?

Our current school list had been developed over a decade in an old system we are sun setting in the near future. Users would add new schools to the list as needed. Over time this list grew to be a mishmash of ~350 schools where 10-15% were either incorrectly spelled, a duplicate with a slight variation, or a 1 to 1 duplicate. The old system wasn't exactly fool proof.

In our new SDMS, we aren't allowing users to input school names, instead we're going to provide an up-to-date list that will have 99% of schools (have to leave out 1% to cover our butts if we miss any 🙂). By taking control of the list, we're hoping to avoid the issues that arose in the last system. We also have a plan for a moderated system to add newer schools as they come up. This plan will allow for us to regulate the data and ensure the validity and integrity of the data as the new SDMS grows. Luckily for us, Stats Canada has already done an extensive collection of schools in Canada. Stats Canada document their data collection process, and explain their methodologies quite thoroughly.

The problem..

Our database stores School and SchoolBoard records in a many to one relationship.

This was a decision I made a long time ago to normalize school board data in our database. That way instead of storing the string "Toronto District School Board" hundreds of times, we store it once as a SchoolBoard and create a relationship to a School using the primary key.

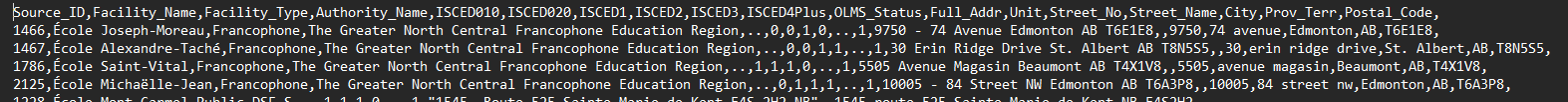

The dataset provided by Stats Canada isn't normalized in this way. Every representation of a school board is repeatedly recorded as a string.

The picture is a small representation of the ~19,000 lines of data, but the problem is becoming clearer, right?

I need to turn this sloppy csv data into a SQL insert statement for our database. The sheer size of the dataset meant I was going to need to automate this somehow. Usually, excel is a great tool for handling data and turning it into a series of insert statements. Here's a Stack Overflow discussion on the topic. However, due to the relationship I needed to create in the statement, setting id's of school boards onto school records, excel did not feel like the right tool. There's also a small relationship between Provinces (another reference table we use) and schools, which complicates this task even more.

Choosing the right tool

I settled on creating the SQL script using JavaScript. I considered C#, but this will be a one off program and won't require any maintainability in the future. I decided to trade the strongly typed safety net of C# for speed of development and flexibility of JavaScript. Since the dataset wasn't millions of records, I didn't need the multi-threading that C# provides.

Solution attempt 1...

Since the School table can have a relationship to a SchoolBoard record (can because it's a nullable foreign key), I decided to handle that piece of the task first.

First thing I wanted to do was find all distinct school boards in the dataset and give them some unique identifier (the unique identifier will come in handy later). I created a simple function that looked through the dataset and created a hashmap that used the school boards index in the list as the value and the name of the school board as the key. The index would act as a unique value for each school board in the map. I couldn't use the school board names are some had french accented characters.

const getDistinctSchoolBoards = (fileRows) => {

const schoolBoards = new Map();

for (let i = 1; i < fileRows.length; i++) {

// csv package to parse csv strings aptly named csv-string

const rowData = csv.parse(fileRows[i])[0];

let schoolBoard;

// at row 980 the csv file moves the schoolboard to a different column

// don't ask me how long it took me to figure this out...

if (i <= 980) {

schoolBoard = rowData[3];

} else {

schoolBoard = rowData[4]

}

schoolBoards.set(schoolBoard, i);

}

return schoolBoards;

}A function that returns a hashmap of distinct school boards

Okay, great. Now I have every unique school board in the list with a unique way to identify each one. The unique identity is important to me because I want to declare several variables in the SQL script, and I'll need to create a variable for every school board where I'll store the newly generated primary key to use as the foreign key in the school table.

I suppose I could have mentioned this earlier, but I used the node file system tools to handle loading the .csv file, and writing the .txt file containing the SQL script. I felt I could load the data file into memory since it wasn't that large of a file. Otherwise I would have considered a file stream.

This is how I did it

const { readFile, writeFile } = require('node:fs/promises');

const main = async () => {

const schoolsData = await readFile('./schools.txt', {encoding: 'utf-8'});

// other processing code...

await writeFile(`schools_sql.txt`, data, {encoding: 'utf-8'});

}I felt I had everything in place to actually start creating the script. I added to the start of the script a series of SELECT statements for the province id's required to be put into every school record. This was simple and I did this by hand. The function merely returns the declarations of variables and the SELECT statement for each variable to populate the variable with the id value.

Next, I had to create the School Board INSERT statements. Remember, I also need to capture the newly created primary key of every unique school board and store it in a variable. Luckily with T-SQL (our database engine is Microsoft SQL Server), there is the built in system function, @@IDENTITY which returns the last identity value created.

An example usage would be something like this

DECLARE @SCHOOLBOARD BIGINT;

INSERT INTO [dbo].[SchoolBoard] ([CreatedDate], [Name])

VALUES (GETUTCDATE(), 'Toronto District School Board')

SELECT @SCHOOLBOARD = @@IDENTITY`;

My thinking was that I could just do this for every school board, and therefore I would have every unique school board's primary key as a variable in the script.

Here's the function I wrote to append this part of the script

const appendSchoolBoardInsertSql = (data) => {

let result = ''

for (const [key, value] of data.entries()) {

result += `DECLARE @SCHOOLBOARD${value} BIGINT;\nINSERT INTO [dbo].[SchoolBoard] ([CreatedDate], [Name], [TransactionUser]) VALUES (GETUTCDATE(), '${key.trim().replaceAll("'", "''")}', 'System')\nSELECT @SCHOOLBOARD${value} = @@IDENTITY\n`;

}

return result;

}My data parameter in this function is the unique school board data I created. So I use the built-in Object.entries() function to get an array of arrays, where the key-value pairs are stores, and iterate over that list.

Here's a simple example to help people understand what's happening

const object = { 0: 'a', 1: 'b', 2: 'c' };

const entries = object.entries(); // can also use Object.entries(object)

console.log(entires);

// [ [0, 'a'], [1, 'b'], [2, 'c'] ]For brevity, I use array destructuring in my for loop, since I know the Object.entries() function returns to me an array at every instance of an object-key pair. In this case, the key is the name of the school board, and the value is the index, or unique identifier for that school board name.

You might notice the transformation being done on the school board name, key.trim().replaceAll("'", "''"). I do this to escape single quotations so that they do not break the text entry within the INSERT statements. For example, a school board name like 'Conseil des écoles publiques de l'Est de l'Ontario' would cause issues as a string in T-SQL.

Other than that, the function is quite simple. It declares the school board variable adding the index to the end of the variable name as a way to ensure no duplicate variables are declared. Then the function creates the INSERT statement with values. Finally, the primary key of that soon-to-be inserted data is stored into the recently declared variable using the @@IDENTITY function. Using the line break \n is an easy way to see the separation of the different parts of the string within the code.

Now that the school board inserts have been appended to the script, I can move on to the schools. This part doesn't require much processing. I can just loop through the data in the .csv file and take the values I need to create my INSERT statement.

const appendSchoolInsertSql = (rows, schoolBoards) => {

for (let i = 1; i < rows.length - 1; i++) {

const rowData = csv.parse(rows[i]);

if (!rowData.length) {

continue;

}

// again.. data structure changes at 980 for some reason..

const first980Index = i <= 980 ? 0 : 1;

// there are many other fields that we capture

// but I removed for brevity

const name = rowData[1 + first980Index].replaceAll("'", "''");

const schoolBoard = rowData[3 + (first980 ? 0 : 1)];

const province = replaceDots(rowData[16 + (first980Index ? 0 : 1)]);

const schoolBoardExists = schoolBoards.get(schoolBoard);

const insert = `INSERT INTO [dbo].[School] ([CreatedDate], [ProvinceId], [SchoolBoardId], [Name])\n`;

const values = `VALUES (GETUTCDATE(), ${province ? '@' + province.toUpperCase() : 'NULL'}, ${schoolBoardExists ? '@SCHOOLBOARD' + schoolBoardExists : 'NULL'}, '${name}');\n`;

}

}I parse the row of data using the aforementioned csv-parser package. There were instances where the row of data was just a set of empty comma separated values. To account for this, I added a simple if check, and continue to the next iteration of the loop if there is no data in that row. I capture all the values in variables, and then use those variables to construct my INSERT statement.

The only two interesting pieces here are the schoolBoardExists variable, and the province variable. The Map object in JavaScript gives us access to the handy .get() function. This allows me to search for the school board key using the school board name. If the school board is found, schoolBoardExists will contain the value of that key-object pair. In this case, it's the unique index of that school board. The only time this value will be falsy is when the school board name is not found. So I use a ternary operator to construct the school board insert. If the value exists, I append the unique index to @SCHOOLBOARD variable otherwise it is NULL.

Earlier I quickly mentioned the province variables. I specifically named those variables to align with how they were named in the schools data provided by Stats Canada. Stats Canada used the province 'code' to denote provinces. For example, Ontario = ON, Yukon = YT, Alberta = AB. So, if the province exists on the row of data, I take the code, prepend the @ symbol, and call .toUpperCase() on the province code, just to ensure it aligns with the way I've named the province variables at the start of the script.

Once that function is complete, I add a final COMMIT to the end of the large SQL string, and the code is set to write the file. In the end, the file was ~40,000 lines of SQL. I ran this script against my local database, and while it took upwards of 8 minutes to complete, it worked beautifully.

You might be wondering then, why is this section is entitled Solution attempt 1..

The failure of Solution attempt 1

Our organization, as I imagine many do, host our applications in many environments on the cloud. So the particular database that we're writing this script for exists in a 'dev', 'stage' and 'production' environment. Each of the environments serve their own purpose. The 'dev' environment is meant for average people like me to go into and ensure that the deployment is working as intended and do some other testing, whatever that may be. The 'stage' environment is meant for product managers/other managers to review the application/feature and give the green-light for deployment. 'Production' is what you expect it to be, the wild world of real users.

As a smaller company, we try to save money where we can. One of these ways includes picking the lowest pricing tiers for our 'dev' environment database. What I didn't consider when creating Solution 1 was how this poor $5/month database was going to handle getting slapped in the face with a 40k line SQL script.

The script would run for about 3-4 minutes and then spit out an error

The service has encountered an error processing your request. Please try again.

Error code 701.

Msg 0, Level 20, State 0, Line 16

A severe error occurred on the current command. The results, if any, should be discarded.This Microsoft learning article explains the error in great detail, but the TLDR is that the database is running out of memory running the script.

Time to rethink the solution.

Winner winner, chicken dinner

Before I get to the success story, I tried several other solutions along the way that don't require great detail. I removed the transaction quality of my SQL script and instead inserted several GO statements, but ran into the same issue. I tried one INSERT line and the values were input as a comma separated list. This turned up a separate limitation of SQL in that only 10,000 values can be entered at a time. I imagined that even if I broke the script into 10k chunks, I'd run into the same memory issue.

Alas, it seemed like my only option was to break the SQL script file into several, smaller files. I thought about some solutions, and came up with the one that I thought changed the code the least, but provided me with the outcome I desired.

Again, because this JavaScript code isn't going to be one I maintain or even really use again, I wasn't too concerned with the most optimal solution when refactoring my code. I was looking for the simplest and quickest solution that allowed me to move on from this task and get to more meaningful work on my task list.

Getting the distinct list of school boards stays the same. Where I began my changes was in the creation of the school board insert script. The problem was that I needed the defined school board variables that captured the primary key of the school board to be in the same file as any school that relies on that variable.

Here is how I handled this problem

const batches = {noSchoolBoard: {id: 0, schoolBoardInsert: '', schools: []}};

const appendSchoolBoardInsertSql = (data) => {

for (const [key, value] of data.entries()) {

const string = `INSERT INTO [dbo].[SchoolBoard] ([CreatedDate], [Name]) VALUES (GETUTCDATE(), '${key.trim().replaceAll("'", "''")}')\nSELECT @SCHOOLBOARD${value} = @@IDENTITY\n`;

batches[key] = {id: value, schoolBoardInsert: string, schools: []}

}

}I loop through the values the same way, I even create the SQL string the same way. However, now I create a variable called batches where I can store the school board key with a value object that contains the id (index), the school board insert string, and the schools associated with this school board. Grouping the schools in this array would allow me to ensure that the school board variable declared would be with the schools that depend on that variable.

Changing the function that handles the INSERT statement for schools required a small change as well to accommodate this new batches variable.

Here is the refactored function

const appendSchoolInsertSql = (rows, schoolBoards) => {

const rowData = csv.parse(rows[i]);

if (!rowData.length) {

continue;

}

// again.. data structure changes at 980 for some reason..

const first980Index = i <= 980 ? 0 : 1;

// there are many other fields that we capture

// but I removed for brevity

const name = rowData[1 + first980Index].replaceAll("'", "''");

const schoolBoard = rowData[3 + (first980 ? 0 : 1)];

const province = replaceDots(rowData[16 + (first980Index ? 0 : 1)]);

const schoolBoardExists = schoolBoards.get(schoolBoard);

const insert = `INSERT INTO [dbo].[School] ([CreatedDate], [ProvinceId], [SchoolBoardId], [Name])\n`;

const values = `VALUES (GETUTCDATE(), ${province ? '@' + province.toUpperCase() : 'NULL'}, ${schoolBoardExists ? '@SCHOOLBOARD' + schoolBoardExists : 'NULL'}, '${name}');\n`;

if (schoolBoardExists) {

batches[schoolBoard].schools.push(insert + values);

} else {

batches.noSchoolBoard.schools.push(insert + values);

}

}

}My only addition is lines 22-25. Now I use schoolBoardExists to add the string to the schools array on the school board key in the batches object. Since some schools in the dataset don't list a school board, I have the default noSchoolBoard key to store these schools.

Everything is almost where it needs to be. Now I want to take this batches variable and create an array that has each set of strings required for that particular SQL script file.

Here is the function that does what I mentioned above

const insertBatches = [];

const createInsertStatementBatches = () => {

let currentBatch = 0;

for (schoolBoard in batches) {

if(insertBatches[currentBatch] && insertBatches[currentBatch].length > 4000) {

currentBatch++;

}

if (schoolBoard !== 'noSchoolBoard') {

const declare = `DECLARE @SCHOOLBOARD${batches[schoolBoard].id} BIGINT;\n`;

if (insertBatches[currentBatch]) {

insertBatches[currentBatch] = [...insertBatches[currentBatch], declare, batches[schoolBoard].schoolBoardInsert, ...batches[schoolBoard].schools]

} else {

insertBatches[currentBatch] = [declare, batches[schoolBoard].schoolBoardInsert, ...batches[schoolBoard].schools]

}

} else {

if (insertBatches[currentBatch]) {

insertBatches[currentBatch] = [...insertBatches[currentBatch], ...batches[schoolBoard].schools]

} else {

insertBatches[currentBatch] = [...batches[schoolBoard].schools]

}

}

}

}I create the variable insertBatches to store the list of arrays of strings. I use a for..in loop to iterate through the keys of the batches variable and do a couple checks.

The first check is if there is an element for the current batch in the list, and if the length of that element is 4000. This is the number I decided to use as a limit per how many schools should be in each file. This doesn't mean the SQL script will be 4000 lines, but it was a random number that ended up working for this case. If both of those are true, then I increase the currentBatch counter and move to the next space in the insertBatches array.

Then I check if the school board is an actual school board or my default 'noSchoolBoard' key I created for schools that had no school boards. This check is just to avoid having to create the variable declaration to store the primary key from the created school board record.

The final check is if the batch list exists within the insertBatches array. If it does, we simply add to it, if it doesn't we create it.

We're getting closer.. now that I have the batches grouped in this list, I can finally create the files.

const createFilesFromBatches = async () => {

let counter = 1;

// I know I could've used a c-style loop..

// my brain was just wanted this to be done, so here we are..

// using a random counter variable.

for await (const batch of insertBatches) {

const startOfString = 'BEGIN TRANSACTION\n' + appendProvinceVariables();

const endOfString = 'COMMIT';

const data = new Uint8Array(Buffer.from(startOfString + batch.join('') + endOfString));

await writeFile(`sql${counter}.txt`, data, {encoding: 'utf-8'});

counter++;

}

}The call to appendProvinceVariables() simply adds the province variables to every file so that they are available to school insert statements that rely on them.

I loop through each batch in the insertBatch array, convert the inner array into a big string, and convert the string into a byte array. That byte array is what I used to create the file. In the end this method created 4 files with the largest having ~16k lines. The largest file contained the schools without school boards, as there were a ton of them (cmon Stats Canada), but luckily, the $5 database chugged it's way through it and was able to insert all the data. The other 3 files ran smoothly averaging ~8k lines each, and just like that I was done.

A lesson learned

Never over feed your $5 database, crazy things can happen.

Other solutions

I'm curious to know how others would handle this problem. Are there easier ways to do it? Let me know in the comments below!